Wired: How Software Engineers Actually Use AI

These survey results ring true. I thought I would use the opportunity to express my own nuanced opinions. The impulse started with this piece:

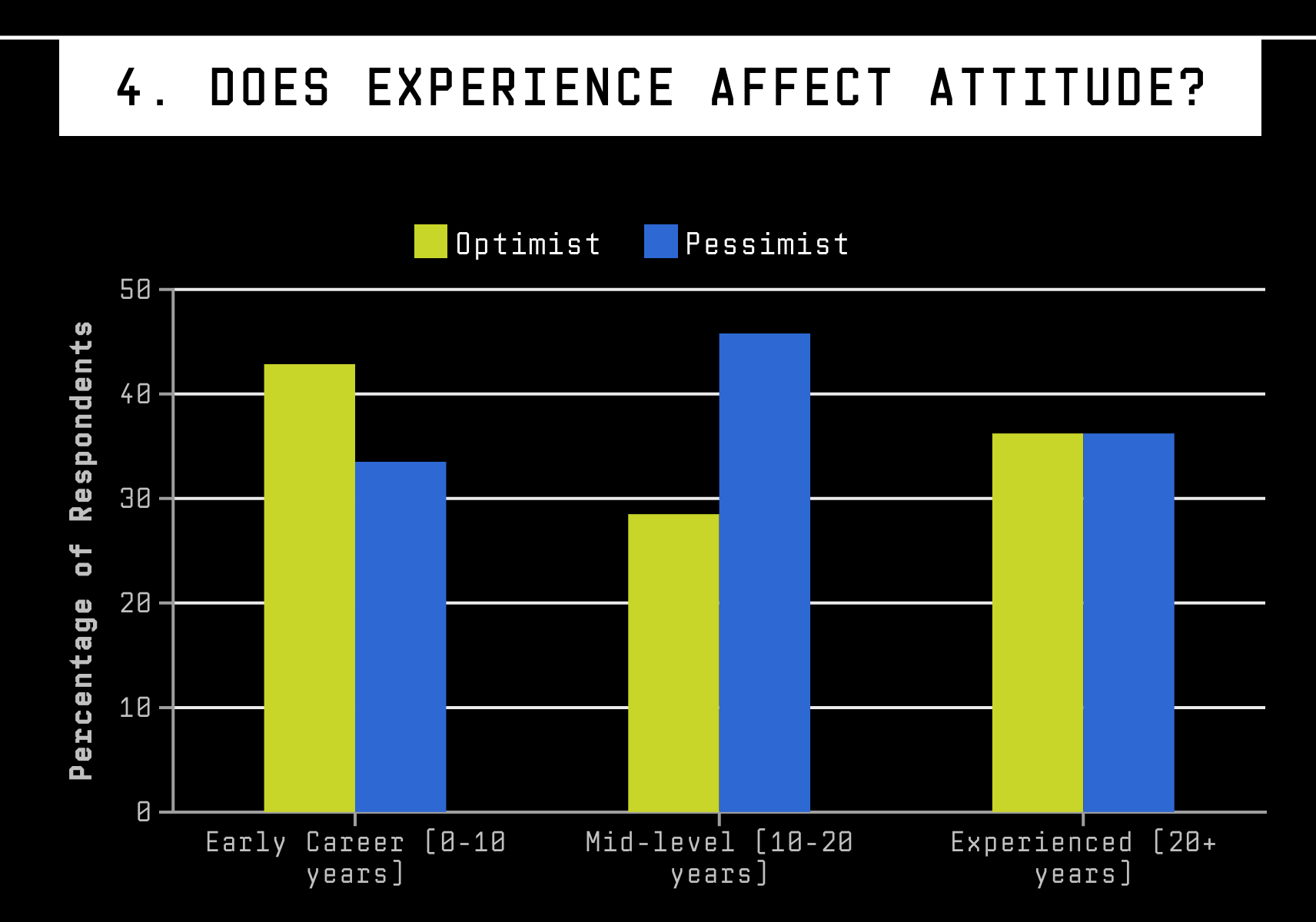

3/4 [of] early-career coders have a higher likelihood of being pro-AI. And baby coders most of all: Three-quarters of people who’ve been coding for less than a year call themselves AI optimists.

The most anti-AI group? It’s not the grizzled, late-career vets—it’s mid-career coders: Nearly half of them identify as AI pessimists. As it was analyzing these results, ChatGPT offered—unprompted—an explanation: “This group likely feels the greatest tension about AI’s impact on job security.”

This mirrors my own observations. I’m apparently in the “grizzled, late-career vets” category, and my thinking is, “We’ll see.” I’ve seen many new tech things come and go: languages, entire stacks, front-end frameworks, and vague market categories like “social media” or “social networking”…

I’m much more concerned about the economic impacts of the current AI bubble. I lived through the first dot-com bubble, but I was too young and too early in my career to appreciate its broader impacts (even though it led to the loss of my first job in the software business). The amount of capital currently being poured into what is essentially an unproven technology (unproven, at least, in the sense that it has yet to demonstrate a value massive enough to justify the investment) is frankly terrifying. Cory Doctorow has written the most cogent analysis I’ve read thus far in “What Kind of Bubble is AI?”:

Add up all the money that users with low-stakes/fault-tolerant applications are willing to pay; combine it with all the money that risk-tolerant, high-stakes users are willing to spend; add in all the money that high-stakes users who are willing to make their products more expensive in order to keep them running are willing to spend. If that all sums up to less than it takes to keep the servers running, to acquire, clean and label new data, and to process it into new models, then that’s it for the commercial Big AI sector.

Just take one step back and look at the hype through this lens. All the big, exciting uses for AI are either low-dollar (helping kids cheat on their homework, generating stock art for bottom-feeding publications) or high-stakes and fault-intolerant (self-driving cars, radiology, hiring, etc.).

Every bubble pops eventually. When this one goes, what will be left behind?

He goes into detail after that, but I will leave it to you to click through and read the rest.

Returning to the Wired survey, this anonymous quote from a respondent also resonated with me:

Upper management does not understand what AI is and thinks it will solve untold problems. Junior devs rely on it too much without understanding their code first.

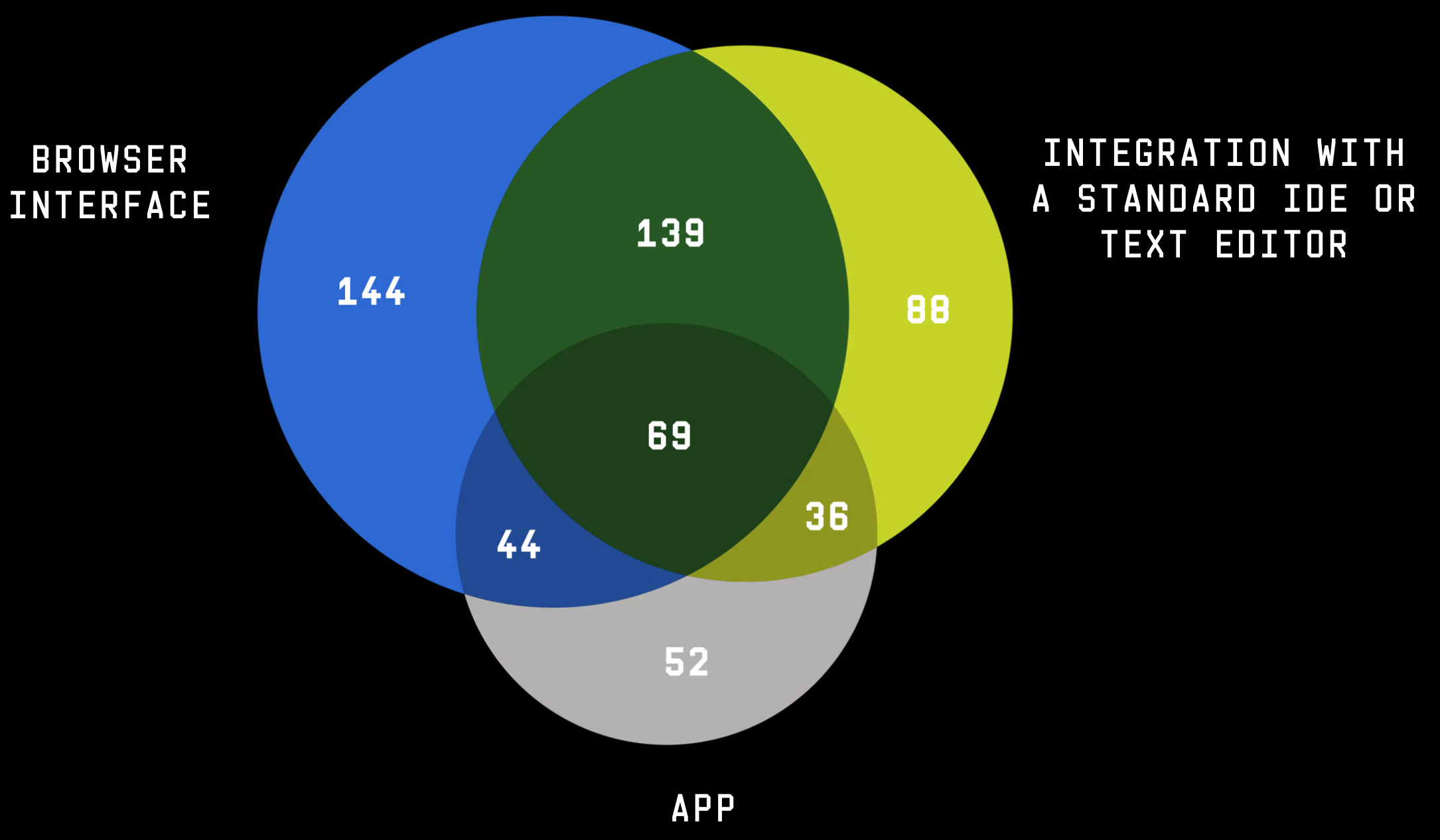

The next thing I found interesting was about the UX modalities for AI. Other than IDEs and web apps, there’s no real killer app for AI. Chat is boring, and this is where I’d link to my never-written post, “The Tyranny of AI is not AI itself, it is the lazy, rushed product designers with no imagination or sense of craft.”

The more experienced you are, the more you go beyond browser interfaces and apps. Nearly one in three veteran coders (20-plus years) integrate AI tools directly into their coding environments.

And not just into their coding environments, but into their one-off personal apps too. Increasingly, they are not relying on the big commercial model APIs at all; they’re hosting models in their homelabs.

The very end of the Wired piece, where they quote ChatGPT’s summary, including a hallucinated quote, is interesting. I won’t quote it here. There’s enough slop in the world already.

A random pro-AI source as a coda: “What Trump’s tariffs mean for the iPhone, China, and globalization,” a Decoder podcast episode with Evan Smith, the cofounder and CEO of Altana, a company that makes software to track and manage the global supply chain. This guy talks about the end of the world with a level of calm I can’t muster for making breakfast.